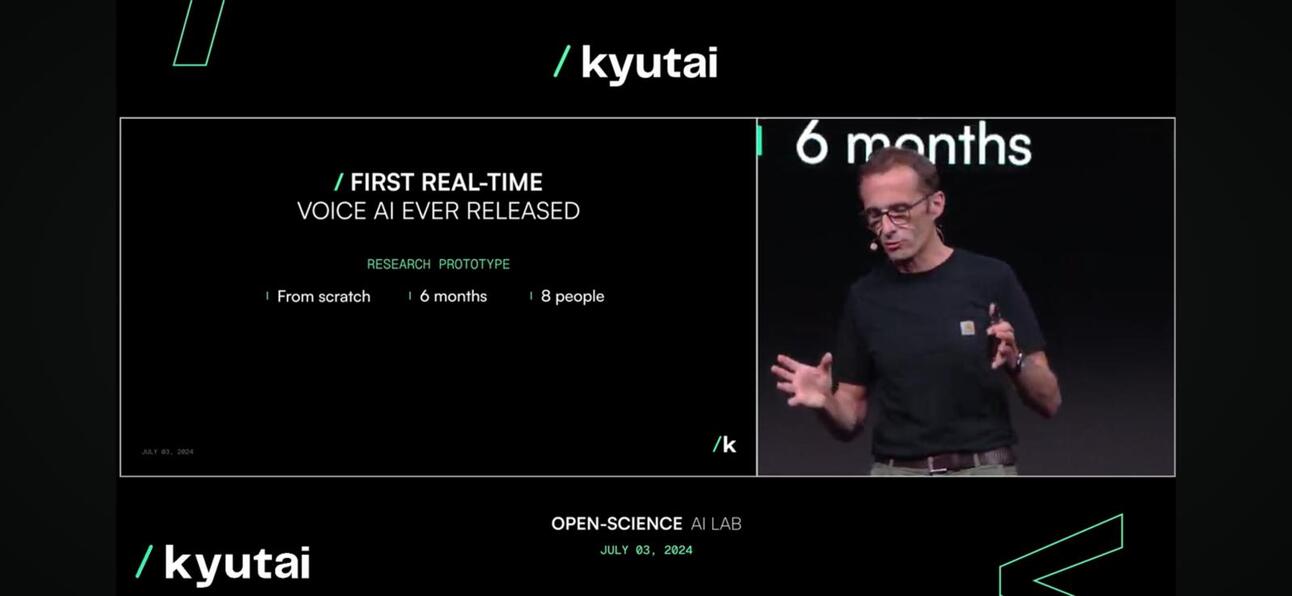

Kyutai unveiled Moshi yesterday, a real-time open-source native multimodal foundation model that can listen and speak, causing an excited uproar in the presentation and online.

Why it’s so exciting?

Moshi is able to understand and express emotions, with different accents for input and output. It can also listen and generate audio and speech while maintaining a flow of textual thoughts. Moshi can also handle two audio streams at once, allowing it to listen and talk at the same time.

Very much closer to a human being (or Skynet).

What’s so novel about this?

- Conversation quality. The tone of voice accounts for 70% of voice conversations, e.g., phone calls, whereas words account for the remaining 30%. The Kyutai team introduced an audio language model that converts audio into “pseudo-words” and predicts the next audio segment from previous audio to carry over a natural conversation to improve 70% of voice conversations.

- Conversation Latency. The maximum latency we can tolerate to have a natural conversation is around 150 milliseconds. As the first release, the Moshi model can deliver a latency between 160ms and 200ms. Not perfect, but considering GPT4o is around 232 to 320 ms, it is pretty remarkable, especially given they are a small team size of 8 FTE.

Moshi, runing in real time

Moshi, runing in real time - Accessibility. The model can run on a device such as a laptop or a mobile phone, which makes it much more accessible to consumers.

model compression to make it available on the edge

model compression to make it available on the edge

So what?

- To a business owner or enterprise, customer interactions are the centrepiece of any business. As most enterprises are developing their AI strategy, leveraging an LLM to improve customer experience over chat is a key part of it, yet text-based. The next phase of this strategy is to improve the customer experience at the voice level, or what’s known as a multi-modality model, which is right over the horizon.

- To a startup, wrapping a closed-source or open-source LLM is no longer going to cut it. How do you embed a voice prompt into your app? Perplexity is introducing a pay feature for voice-based prompts now. It’s not great yet, but it is trending.

- How is this matter to Asia? Asia, especially Southeast Asia, is a non-homogeneous market, and the language and local dialogue differences are key challenges for business expansion. LLM and Voice model is a great way to solve this challenge in helping individuals and businesses navigate the region. The phone makers, especially the Chinese ones, are taking steps to tackle this challenge and opportunity.

How did they do it?

Kyutai developed Helium, a 7-billion parameter language model, and pre-trained Moshi with a combination of synthetic text and audio data from said language model. Delving a little deeper, Moshi’s fine-tuning involved 100,000 synthetic conversions converted with Text-to-Speech (TTS) technology, and its voice was trained on synthetic data generated by another TTS model.

What’s next for Kyutai?

We can expect a technical report and open model versions; future iterations will be refined based on user feedback with a super liberal and permissive licensing strategy to encourage adoption.

To watch the full keynote, please click here.

Lastly, watch out for the East; the Doubao model from Bytedance, which has the richest video and audio content, will be the most formidable player in this space.

Subscribe To Get Update Latest Blog Post

Leave Your Comment: