Introducing Leo’s Takes

Being the ex-CDO of Huawei Cloud, and having built out one of the largest startup ecosystems in the region, I had direct access to:

- Organisations and teams developing GPU chipsets, LLMs (large language models), and other large models (LMs);

- Enterprises who are embarking on their AI transformation journey; and

- AI startups from both the East and West building LLMs, GenAI tools, and applications.

How is this relevant to you?

- If you are a business executive or professional, this article will equip you with a comprehensive view of the current LLM and GenAI tech landscape and practical insights into the underlying risks and opportunities.

- If you are a founder or investor, this article will help you understand the pitfalls and real opportunities in your quest for AI.

Leo’s Takes below are my opinions, derived from a combination of my experience and market due diligence, including workshops, interviews, and discussions with practitioners in the relevant space. These practitioners include research analysts, developers, enterprise executives, and founders, which helps ensure a well-rounded and credible perspective.

The Current Lay of the Land for GenAI and LLMs

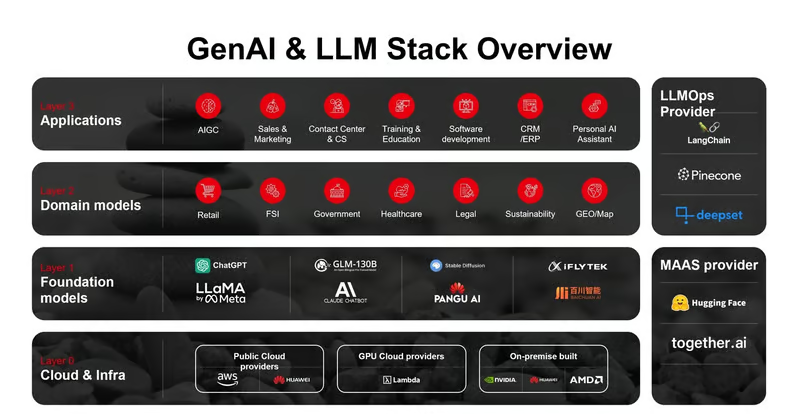

The GenAI and LLM tech stack has stabilised, and each of the following layers has its own business model, challenges, and opportunities. Understanding these layers is crucial for navigating the AI landscape and making informed business decisions.

Layer 0 (Cloud & Infrastructure) – “Where the Money is”

This layer is the bare metal that underpins the entire GenAI stack. It accounts for a staggering 80%+ of the total value of the GenAI economy. Although Nvidia clearly dominates, with nearly 95% of the chip market (Nvidia has surpassed Apple with a $3T market cap), the market is attracting other major players, e.g. Huawei. Jensen Huang identified Huawei as one of Nvidia’s “formidable” rivals, particularly in the AI chip market. The market is also attracting startups and innovators, e.g. Groq, which are approaching it with purpose-built chips called LPUs (language processing units) that focus on the performance and precision of AI inference.

Leo’s Take: The biggest moat that Nvidia has is not just its powerful chipsets but the CUDA ecosystem it has built over the years. It supports developers with:

- Different languages and APIs;

- Libraries and frameworks for different domain tasks;

- Developer tools; and

- Ecosystem partners.

While others like Huawei and AMD offer similar products, the usability and richness of their ecosystems are not on the same scale. As a result, developers need substantially more effort to build models that work with the underlying chip, which makes the cost of migrating away from Nvidia to other chipmakers a significant hurdle. For a listed company like AMD, the decision to focus on ecosystem development is challenging, as it requires long-term investment and won’t yield short- to mid-term returns. Huawei, being a non-public company, does have an advantage here, but US sanctions also hinder its progress.

Layer 1 (Foundational Models & LLMs) – “Who can build the biggest bomb?”

This layer is the engine of the GenAI stack and powers all GenAI apps. It is driven by those who can build the most capable models, and the race is playing out both internationally and domestically, especially in the US and China.

- The race between Anthropic and OpenAI – the Claude 3.5 Sonnet model outperforms competitor models; and

- The race between closed-source and open-source LLMs – open-source models are closing the gap, with a lag of roughly 6 to 10 months.

Source: LinkedIn

- The “LLM War of Hundreds in China”: the price war between hundreds of LLM makers in China is intensifying. ByteDance offered its model at 10% of the market price at the end of May this year, followed by Alibaba Cloud and Baidu with models at similar price points.

And we are starting to see an “AI Cold War” between the West and East.

- OpenAI plans to block API access in China from 9 July, prompting Chinese LLM makers to offer alternatives to tens of thousands of developers. China implemented the Interim Measures for the Administration of Generative Artificial Intelligence Service back in 2023, which raised the bar for foreign LLM development in China.

- A China-led resolution on artificial intelligence passed in the United Nations General Assembly. China is leveraging soft power to advocate AI development in a ‘free, open, inclusive and nondiscriminatory’ environment, in response to the increasing tension between the US and China.

Leo’s Take: The LLM competition is not just about technology, but also about the “intelligence-being” that is deeply rooted in the culture and language of a country or region. For instance, Singapore’s S$70M investment in its National Multimodal Large Language Model (LLM) Programme, known as SEA-LION, is a testament to this.

Technically, the LLM landscape will eventually converge to a handful of models, both closed-source and open-source. A leader such as OpenAI may keep a 6 to 12 month lead over the others. Open-source models are closing the gap swiftly, helped by the fact that most enterprises prefer having the option of open-source models so that they can retain control and privacy. A poll at the Economist Intelligence Network AI forum in Singapore, where I spoke last year, showed over 70% of C-level executives open to open-source LLMs.

Source: AI Business Asia, The Economist Intelligence AI forum Singapore, 2023

Enterprises are no longer just evaluating the “horsepower” of LLMs; they have been accelerating their GenAI transformation with rapid PoCs since the beginning of 2024, although most PoCs are still either free or run on a budget of $50K to $100K. This is creating a “perfect storm” for the layers above and for the convergence of new layers.

Emerging layers and domain model layer – “The battleground of B2B startups”

Model-as-a-service (MaaS) is a hybrid of the infrastructure layer and the foundational model layer. It offers companies easy access to the power of LLMs through pre-trained models and reduces human capital costs. The LLMOps layer is the tooling layer that helps companies streamline the app development process and build domain-specific models. Domain-specific models are purpose-trained (fine-tuned) for a specific industry or task.

Leo’s Take: This is the battleground of B2B startups, who are collectively trying to solve one problem – how to build GenAI apps at optimum efficiency?

The journey of developing and operating a GenAI app is complex, and by 2024 it had attracted thousands of B2B startups into this space, all of whom believe in the idea of “selling shovels during the gold rush”. Many of them are doing well, e.g. LangChain and FlowiseAI. However, the emerging layers are volatile spaces, mainly because of the pace of development in the foundational model (LLM) layer. The boundaries and features of LLMs are evolving, which makes earlier versions obsolete (e.g. GPT-3.5 is being deprecated), and new features and capabilities are being added that may overlap with some of these tools.

Cloud service providers are entering the MaaS space by offering pre-trained open-source models as an API; in the end, it is a competition on tokens per second and the associated costs. That being said, players like Hugging Face, which built its own models and curated a great developer community, are still thriving; for example, it is estimated that Hugging Face generated $70M ARR last year. I find this estimate credible, but I believe this revenue is largely attributable to its partner AWS, through an AI inference revenue share, rather than to developers.

So what’s the takeaway here? Of all the five layers, this is my favourite space, and I have immense respect for the builders and founders in this space. They are the ones who create the “multiples” that help the entire ecosystem yield better results and value. Meanwhile, the risk is fairly high for startups and investors, but so is the reward.

So whether you are a founder or investor, I believe the three key success factors, in this order, are:

- Audience first;

- The problem comes second; and

- Finally, your product.

It’s important to note that your audience is not necessarily your customer. In many cases, you may need to grow your audience community first and generate revenue from other sources, as seen in the case of Hugging Face.

Layer 3 (GenAI Apps) – “The Need for Speed”

This is the most cutthroat of all the layers, driven mainly by B2C GenAI apps and tools. a16z published its list of the top 50 GenAI web products, ranked by monthly visits; it shows that over 40 per cent of the companies on the list are new compared to a16z’s initial September 2023 report. This level of turnover reflects how much depends on a company’s ability to reach product-market fit (PMF), and how quickly it can do so. It pushes most of these B2C companies to wrap their products around well-known LLMs, such as GPT-4 and Llama 3, which investors often criticise as being a “wrapper company”.

Source: a16z

Leo’s Take: There is no shame in being a wrapper company, as an end product that delights your customer is what matters most. As underlying LLMs become a commodity, the way that you orchestrate AI, e.g. latency, workflow integration, and UI, will matter the most. On that front, human agency is what commands the premium in the product.

I have interviewed over 100 AI startups, from seed-stage to unicorn, across the West (e.g. US & EU) and East (e.g. China & Singapore) since 2023. The two sides show very different approaches to go-to-market: US startups are very product-centric, whereas Chinese startups are a lot more flexible with service. The main reason is that the Asia market demands more customization. This is one of the human agency factors that matter to the success of app companies. By that, I do not mean creating an app that is so customizable that you can scale it, but rather the ability to quickly spin up an app that is feature-specific and caters to a niche space or audience. China is no longer just the world factory you know, making the consumer products you use daily. It is now gearing up to be the world factory of AI apps, especially given the proliferation of GenAI apps.

Check my post, “A paradigm shift in building a million-dollar startup in less than one year if you are taking the right approach.”

What’s the secret sauce behind their success?

Impact on Asia Startups and Enterprises

The evolution of LLMs and GenAI is by far the fastest-paced technology progression in human history, and it is not slowing down but accelerating. The biggest challenge for C-level executives and founders is not choosing models and apps but addressing the ever-increasing knowledge gaps and building a strategy and execution plan to ensure that AI works for them.

Asia is a uniquely challenging and promising market, being the most populous continent and far from homogeneous. However, it often receives less attention from AI makers in the US. For instance, OpenAI only opened its first Asia office, in Tokyo, this year, and the majority of US AI startups I’ve spoken to are solely focused on the US and EU. Hugging Face, for example, has only a couple of employees in Asia. This situation presents both a problem and an opportunity for Asia’s founders and enterprises, and it’s crucial to be aware of these dynamics.
