As we advance into the third year of the Generative AI revolution, artificial intelligence is undergoing a seismic shift. The focus is transitioning from rapid, pre-trained responses (“thinking fast”) to deliberate, reasoning-driven intelligence at inference time (“thinking slow”). This evolution is powering a new generation of agentic applications, reshaping industries and redefining possibilities.

The Stabilization of Generative AI’s Foundation Layer

The Generative AI market has reached a pivotal stabilization phase. Industry giants like Microsoft/OpenAI, AWS/Anthropic, Meta, and Google/DeepMind have fortified their positions within the foundation layer. Backed by significant capital and efficient economic models, these players have made next-token prediction faster, cheaper, and more accessible.

However, as the foundation layer stabilizes, the spotlight shifts to the reasoning layer — the domain of deliberate problem-solving and cognitive operations. This “System 2” thinking goes beyond pattern recognition, focusing on AI models capable of reasoning and decision-making at inference time. Inspired by advancements like AlphaGo, this layer is transforming how AI solves complex, real-world problems.

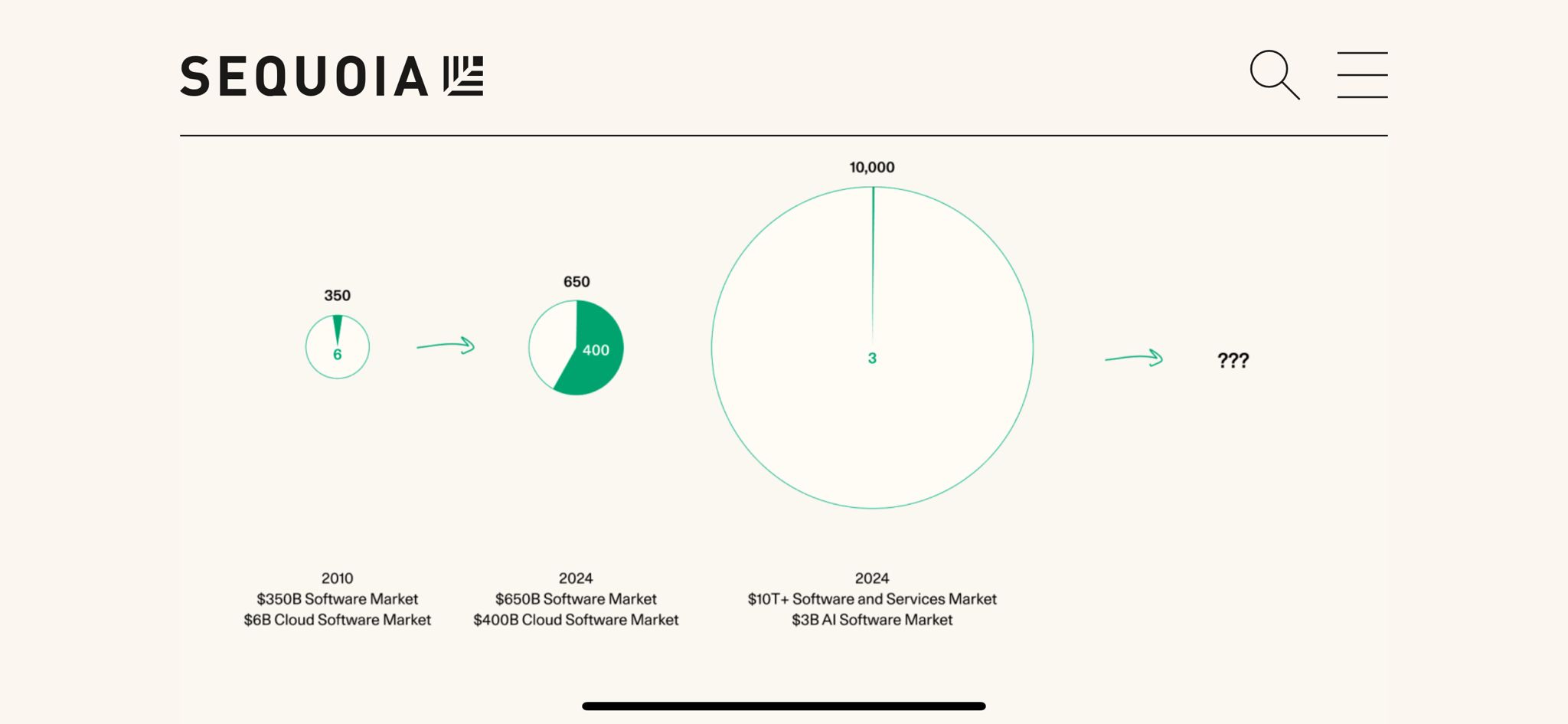

From Software as a Service (SaaS) to Service as a Software

Generative AI is breaking boundaries, leading us from the SaaS model to a revolutionary paradigm: Service as a Software. Here, AI doesn’t just provide tools — it performs the work itself. This shift unlocks a trillion-dollar market opportunity, redefining industries like customer support, cybersecurity, and software development.

Take Sierra, an AI-powered customer support agent. Companies no longer pay for software seats; they pay per resolved issue. This outcome-driven approach epitomizes Service-as-a-Software: delivering measurable results. Similarly, GitHub Copilot has evolved from assisting developers to automating entire coding workflows, and XBOW revolutionizes cybersecurity with continuous AI-driven pentesting.

Reasoning at Inference Time: The Next Frontier

The leap from instinctual responses (“System 1”) to deliberate reasoning (“System 2”) marks the next transformative step in AI. Models like OpenAI’s o1 (code-named Strawberry) are pioneering this shift by scaling “inference-time compute”, which lets models pause, evaluate, and reason before responding.

This innovation has already revolutionized domains like coding, mathematics, and scientific research. Inspired by AlphaGo’s groundbreaking decision-making framework, these advancements unlock unprecedented cognitive capabilities, paving the way for AI to tackle increasingly complex challenges.

Cognitive Architectures: Tackling Real-World Complexity

While general-purpose reasoning models advance, real-world applications demand domain-specific cognitive architectures. These architectures emulate human workflows, breaking tasks into discrete, logical steps.

For example, Factory’s droids automate software engineering tasks like reviewing pull requests, running tests, and merging code. By combining foundation models with application logic, compliance guardrails, and specialized databases, cognitive architectures create practical, intelligent solutions tailored to industry needs.
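To make the idea of a cognitive architecture more concrete, here is a minimal Python sketch of one as an ordered pipeline of discrete steps, with a compliance guardrail that can halt the run. It is not Factory’s implementation: the `PullRequest` type, the step functions, and the “no `SECRET_KEY` in the diff” rule are hypothetical, and the review step is only a placeholder where a foundation-model call would go.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PullRequest:
    """Hypothetical stand-in for a pull request handled by an agent."""
    title: str
    diff: str
    tests_pass: bool
    log: List[str] = field(default_factory=list)


# Each step inspects the PR, records what it did, and returns
# True to continue the pipeline or False to stop it.
Step = Callable[[PullRequest], bool]


def review(pr: PullRequest) -> bool:
    # Placeholder: a real system would call a foundation model here.
    pr.log.append("review: ok")
    return True


def compliance_guardrail(pr: PullRequest) -> bool:
    # Hypothetical rule-based guardrail: block diffs that expose a secret.
    if "SECRET_KEY" in pr.diff:
        pr.log.append("guardrail: blocked (secret in diff)")
        return False
    pr.log.append("guardrail: ok")
    return True


def run_tests(pr: PullRequest) -> bool:
    # Placeholder: the test outcome is supplied on the PR object.
    pr.log.append(f"tests: {'pass' if pr.tests_pass else 'fail'}")
    return pr.tests_pass


def merge(pr: PullRequest) -> bool:
    pr.log.append("merge: done")
    return True


PIPELINE: List[Step] = [review, compliance_guardrail, run_tests, merge]


def run_pipeline(pr: PullRequest, steps: List[Step] = PIPELINE) -> bool:
    """Run the steps in order and stop at the first one that fails."""
    for step in steps:
        if not step(pr):
            return False
    return True
```

For example, `run_pipeline(PullRequest("Fix typo", "- teh\n+ the", tests_pass=True))` walks through all four steps, while a diff containing `SECRET_KEY` stops at the guardrail before tests or merge run. Keeping each step a plain function makes it easy to reorder steps or add guardrails without touching the others, and `pr.log` leaves an audit trail of what the agent did.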

Agentic Applications: Redefining the Market

Generative AI’s reasoning capabilities are driving a wave of agentic applications — AI tools that take initiative and deliver tangible results. Examples include:

- Harvey: AI-powered legal assistant

- Glean: AI work assistant

- Abridge: AI medical scribe

- XBOW: AI pentester

- Sierra: AI customer support agent

By reducing the marginal cost of delivering these services, agentic applications make sophisticated tools accessible to businesses of all sizes. For instance, XBOW’s automated pentesting democratizes cybersecurity, enabling companies to conduct regular assessments affordably.

Scaling Inference-Time Compute: The Future of AI

The next chapter of AI innovation hinges on scaling inference-time compute. OpenAI’s o1 model introduces a new scaling law: the more compute allocated at inference time, the better the reasoning capabilities. This shift will drive the rise of inference clouds — dynamic environments that adjust compute resources based on task complexity.
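OpenAI has not published exactly how o1 spends its inference-time compute, so the sketch below illustrates the general “more compute, better answers” idea with a different, well-known technique: self-consistency, i.e. sampling several answers and taking a majority vote. The “model” is a simulated random solver that answers correctly 40% of the time (an assumption chosen purely for illustration); accuracy should rise as the number of samples per question, and therefore the inference compute, grows.

```python
import random
from collections import Counter


def noisy_solver(rng: random.Random, correct: int, p_correct: float = 0.4) -> int:
    """Simulated model sample (hypothetical): right with probability p_correct,
    otherwise one of five wrong answers chosen uniformly."""
    if rng.random() < p_correct:
        return correct
    return correct + rng.randint(1, 5)  # wrong answers spread over 5 values


def majority_vote(samples: list) -> int:
    # Most frequent answer; ties go to the answer seen first.
    return Counter(samples).most_common(1)[0][0]


def accuracy(n_samples: int, trials: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated questions answered correctly when
    n_samples answers are drawn and majority-voted per question."""
    rng = random.Random(seed)
    correct = 42
    hits = 0
    for _ in range(trials):
        samples = [noisy_solver(rng, correct) for _ in range(n_samples)]
        if majority_vote(samples) == correct:
            hits += 1
    return hits / trials


for n in (1, 5, 25):
    print(f"{n:>2} samples per question -> accuracy {accuracy(n):.2f}")
```

Voting only helps here because the wrong answers are scattered while the right one is consistent; if the simulated model made the same mistake every time, extra samples would not help, which is one reason reasoning models also invest in better search and verification rather than sampling alone.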

Imagine models capable of reasoning for hours or days. This capability could lead to groundbreaking discoveries in mathematics, biology, and other fields, solving problems once deemed insurmountable. The transition from massive pre-training clusters to agile inference clouds marks a significant milestone in AI development.

Opportunities in the Application Layer

For startups and investors, the application layer offers the most promising opportunities for innovation. While hyperscalers dominate the foundation layer, the application layer enables the creation of domain-specific solutions that address real-world problems with precision.

By leveraging custom cognitive architectures and reasoning capabilities, startups can design tools that integrate seamlessly into workflows, bridging the gap between general-purpose models and practical applications.

Final Thoughts

AI is changing the world at an incredible pace, and it is changing the business landscape just as quickly, including how businesses compete and grow. The reason is that the cost of building a product, delivering a service, or providing a customer experience is now a fraction of what it was. AI has unleashed the “10x” factor.

Here are three personal opinions about the future of business and AI based on my experiences growing GroundAI and serving our clients.

- Evolution from PLG to ALG. Traditional SLG (sales-led growth) and PLG (product-led growth) are no longer as effective, because the client base has not expanded as fast as productivity has grown. Growth therefore leans heavily on distribution, which calls for a new approach to reaching your audience. I call it ALG, audience-led growth. The crux is engaging your audience effectively across different platforms.

- The acceleration of Chinese tech companies expanding abroad, but with a tale of two cities.

B2C and mass-market B2B SaaS companies ($20 to $100/month), e.g. talkie.ai and runcomfy.com, are taking shape and ranking in the top 3 of their spaces. Many of these startups have a dev team of fewer than five people but are powered by very sophisticated SEO and ALG approaches. On the other side, big-ticket SaaS/software companies ($50K to $100K ACV) are still struggling to gain market share, mostly because of a problem I call “founder-market fit”: the founders can’t localize their mindset to create a consistent execution plan to land and expand outside China. Many of them, however, are actively hiring overseas talent with attractive offers, e.g. up to USD 350K/year.

- Paradigm shift from software-as-a-service to “service-as-a-software”. LLM capabilities are advancing faster than we anticipated, e.g. the reasoning capabilities of OpenAI’s o1 and o3. However, the “last mile” of delivering these capabilities to business clients is not there yet. This is why we see a proliferation of agentic AI service startups, but unfortunately they solve only 20 to 30% of business needs. That’s why most AI startups offer services or make money from “servicing.” See my article “Why major AI SaaS is charging $20/month.”

Generative AI is redefining the boundaries of software and services, ushering in an era where AI performs the work itself. As we transition from Software-as-a-Service to Service-as-a-Software, the potential to transform industries and create new markets is unparalleled. The focus is no longer on mimicking human intelligence but on reasoning, adapting, and delivering outcomes that reshape what’s possible.

The question now is not whether AI can evolve but how it will redefine the way we work, innovate, and solve the world’s most complex problems.
