“AI’s next stage is called physical AI. Physical AI is where AI interacts with the physical world. It means robotics.” – Jensen Huang, Nvidia, at a factory opening ceremony in central Taiwan on Jan 16, 2025.

This year, CES was all about AI integration into hardware, from wearables to AI-empowered furniture to robots! Stealing the thunder were not the non-sexy machinery robots that warehouses have all adopted, but the human look-alikes, the fun ones we see in sci-fi movies.

We can say robotics is having a “ChatGPT moment” (as in, the technology is not new, but public and capital interest has experienced an awakening).

In particular, last year we saw how generative AI has drastically transformed robotics. Robots previously controlled by a remote can now “think” for themselves, with AI built into the machine through seamless hardware-software integration. We’re seeing the early days of two vastly different areas of science coming together: LLMs/GenAI and robotics.

Just last week, it was reported that OpenAI had secretly reopened its robotics division after shutting it down in 2020. According to the company, its new robotics team will focus on “unlocking general-purpose robotics and pushing towards AGI-level intelligence in dynamic, real-world settings.”

[I will publish a deep dive into Unitree, the leading embodied-AI humanoid and quadruped robotics firm, in a few weeks, so stay tuned.]

One framework I found helpful for understanding physical AI is to look at the whole thing as a continuous process of three steps:

- Sensing (the eyes, ears, and hands of the robot—sensory technology),

- Thinking (the decision-making process—GenAI),

- and Acting (the ability to follow through and have an impact in the physical world—mechatronics).

For a more technical way of categorizing this, Diana Wolf Torres, an AI writer, has said the system architecture can be broken down into:

1) Perception Layer: This is where the machine processes multi-modal sensory input through advanced sensor fusion algorithms. It helps the computer better understand the physical environment around it.

2) Cognitive Layer: This is the core of how AI integrates into traditional robots. It serves as the machine’s decision-making brain, processing the data collected by its perception layer, based on pre-trained simulations, to decide how to react to the physical world.

3) Action Layer: This layer creates the “output” to the physical world and ensures accuracy in its movement. It is where we’ll see the jumping, walking, or moving of objects.
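To make the three layers a bit more concrete, here is a toy sketch of one pass through the loop. Everything in it is my own illustration and not anyone's actual robot stack: the function names (`perceive`, `decide`, `act`, `step`), the two distance sensors, the simple averaging used as "sensor fusion," the 1-meter stop threshold, and the 0.5 m/s walking speed are all assumptions. In a real system, the cognitive layer would be a trained model rather than a one-line rule.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    # Fused estimate of the distance to the nearest obstacle, in meters.
    obstacle_distance_m: float


def perceive(camera_m: float, lidar_m: float) -> Observation:
    """Perception layer: fuse two hypothetical distance readings by averaging."""
    return Observation(obstacle_distance_m=(camera_m + lidar_m) / 2)


def decide(obs: Observation, stop_threshold_m: float = 1.0) -> str:
    """Cognitive layer: a hand-written rule standing in for a learned policy."""
    return "stop" if obs.obstacle_distance_m < stop_threshold_m else "walk"


def act(command: str) -> float:
    """Action layer: turn a command into a target forward speed in m/s."""
    speeds = {"stop": 0.0, "walk": 0.5}  # assumed values for illustration
    return speeds[command]


def step(camera_m: float, lidar_m: float) -> float:
    """One pass through the loop: sense, then think, then act."""
    return act(decide(perceive(camera_m, lidar_m)))


if __name__ == "__main__":
    print(step(3.0, 2.0))  # path is clear, so the robot keeps walking
    print(step(0.8, 0.6))  # obstacle is close, so the robot stops
```

The point of the sketch is the shape, not the details: each layer only talks to its neighbor, which is why a smarter "brain" can in principle be swapped in without rebuilding the sensors or the motors.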

And physical AI encompasses so much: 1) autonomous vehicles (which I’m working on a piece about), 2) specialized robots for warehouse and labor automation (traditionally what we know of), and 3) humanoid robots.

Disclaimer: Today, we will only focus on Humanoid robots. I tried my best to make my thoughts logical as I jotted them down and attempted to understand the evolving relationship between robots and AI.

Humanoid Robots

First, what is embodied AI?

Embodied AI refers to the artificial intelligence (AI) agents — robots, virtual assistants, or other intelligent systems — that can interact with and learn from a physical environment. (Qualcomm’s definition)

For many years, we have gotten used to robot arms for warehouse use, coffee-making robots all over Tokyo and Shanghai, robot dogs for fun and delivery purposes, and other such devices. But these robots kind of lost their wow factor.

About ten years ago, a family friend invested in a robot restaurant in Beijing. It was a machine that could chop and make about 10 dishes that were programmed into it. My mom thought it was so cool, but the robot didn’t look like a human, just another machine, so the novelty didn’t work on me (I’m pretty sure they closed the shop after a year). The dishes tasted fine, but cooking isn’t science; you need to feel the fire and judge how much salt to add. Sometimes, you need to jazz it up and improvise. The more suitable use case is a big university or campus cafeteria, a factory, or a prison, where you need to provide standardized food on a mass scale efficiently. It just didn’t work as a mid-range restaurant in the CBD of Beijing. There are many better options to eat there than meh-tasting robot-made food.

Now, let’s introduce humanoids, robots that are physically designed like humans. They have two arms, two legs, and a head, and their mobility mimics that of our bodies.

“The easiest robot to adapt in the world are humanoid robots because we built the world for us,” Jensen Huang said at Computex, adding: “There’s more data to train these robots because we have the same physique.”

And I agree with him. Our obsession with human-looking robots now goes beyond how egotistic and self-important we are as humans. It’s an optics thing but also a practical thing. So now it’s sounding more and more like I, Robot.

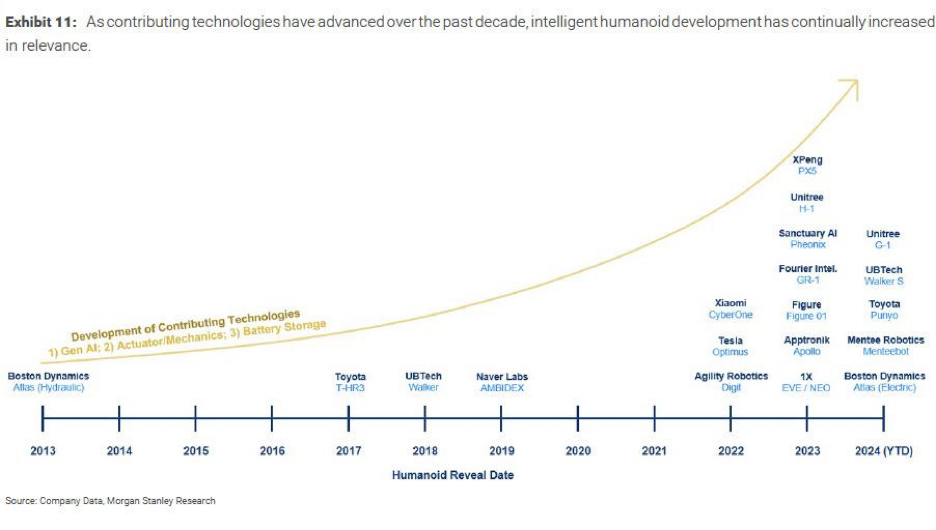

The mainstream interest in humanoid robots started in late 2022 when Tesla unveiled its Optimus. Since then, many robotics companies have received attention from the media and investors worldwide. This became the new “wow” robot form.

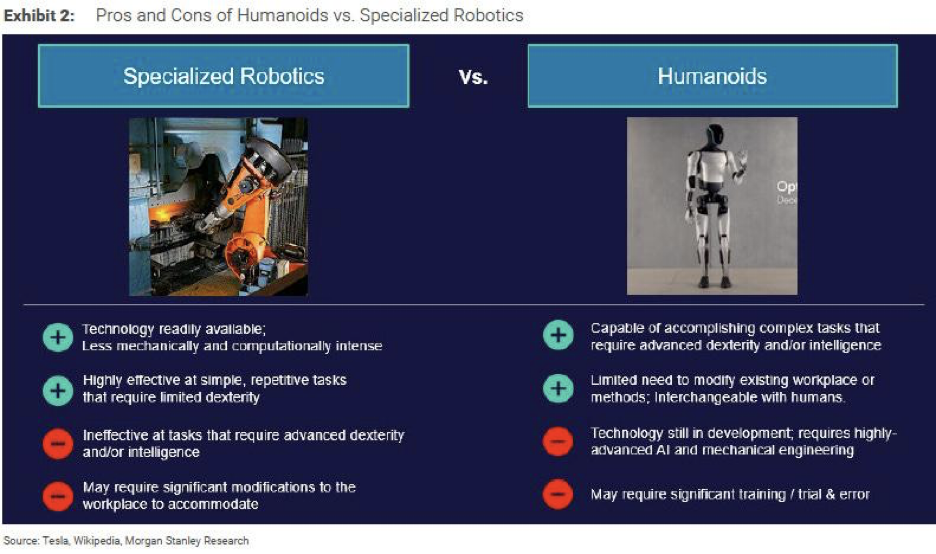

How are they different from traditional robots?

Traditional robotics has been around for decades. These conventional robots relied on rigid programming to execute pre-written instructions (they followed a set of orders and could not “think”). They were essentially limited to repetitive, pre-programmed motions with little flexibility, and the complexity of tasks they could handle was constrained. By contrast, embodied AI robots are trained on real-world data using reinforcement learning, and they have developed the ability to “think.”

In October 2024, during a video call at a tech conference in Riyadh, Elon Musk predicted that by 2040, there would be at least 10 billion humanoid robots priced between $20,000 and $25,000. While we are still far from that, we are one step closer to robots becoming more accessible and prevalent with frontier robot models such as Ameca and those from Tesla, Agility Robotics, Unitree, and so on.

At Tesla’s most recent AGM, Musk, the Tesla CEO, predicted that humanoids will outnumber humans by two to one or more in the future. That is wild (and scary).

And just today, David Solomon, CEO of Goldman Sachs, said that AI will revolutionize business productivity. His view is that eventually, much of what junior bankers do will be replaced by AI, such as drafting IPO filing documents, 95% of which can now be completed by AI in minutes: “the last 5 per cent now matters because the rest is now a commodity.”

Executives are looking at this revolution and really thinking that AI robots will replace (assist) us one day.

Development and Limitations

Nvidia’s keynote speech in March 2024 also focused on physical AI, meaning robotics. The advancement of robots relies on three main factors: 1) GenAI, 2) actuators and mechanics, and 3) battery storage (which I’ve also touched on before when writing about renewable energy solutions here).

If the three factors can progress together, the mass scaling and commercialization of humanoid robotics will likely come within a few years. In the Unitree piece, I talk about how China’s decades as the hardware manufacturing hub for various appliances have given it a know-how advantage in mechatronics, which combines mechanical technology and electronics. While the U.S. is still obviously leading in GenAI, the question is which factor matters more, or whether there will be an intention to collaborate. And who can figure out the last piece, a better battery solution? Can they make batteries lighter, longer-lasting, and safer?

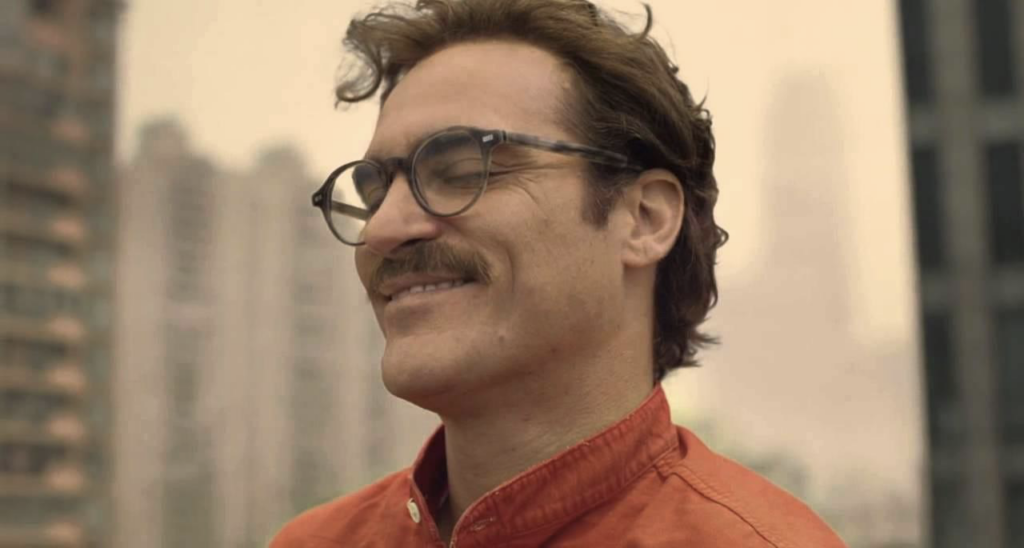

We humans have been dreaming of a world where robots are side-by-side with us for decades, from the 2013 movie Her, where we imagined a virtual AI lover (which can practically be real now), to Subservience, the 2024 sci-fi thriller featuring Megan Fox, where physical AI challenges our morals of faithfulness and loyalty and even leads to serious safety risks. The imagination has always been that we will have robotic selves created one day. It’s just that now, the technology has finally advanced enough to catch up to our imagination. (Obviously, the current humanoids look nothing like Megan Fox yet.)

A robot that falls in love with the human and turns evil in the movie /Subservience/

A human protagonist who falls in love with an AI voice agent in the movie /Her/

So, to my point, this is obviously just the beginning. As resources now all go to physical AI (or AI in general), this interdisciplinary field will mature out of its nascent stage and continue to advance faster than maybe we can even comprehend. As our physical and digital worlds continue to mold and blend into each other, the safety concerns really worry me as a mother of a 2-year-old. If smartphones are already confusing children about reality, how should we regulate this technology, and what will robots be capable of when she reaches her teenage years?

There is still a lot to unpack, so I will add a new vertical to AI Proem: Physical AI. In this vertical, I will explore the many areas that may affect the development of Physical AI. First, I will do a deep dive into China’s leading humanoid robot company, Unitree Robotics, which is often compared to Boston Dynamics. I will also explore the battery solution angle, which I have been trying to find time to look into after my renewable energy piece.

Then, I think it will be interesting to explore the possible theories on how robots could go rogue. This touches on overall robotics safety and the dexterity of robot machines. Then, there are the technicalities of connectivity, bandwidth, and latency, plus other key components: sensors (LIDAR), sensor fusion, and software architecture. This is also related to another work-in-progress piece that will examine how EV companies are transitioning to AI-first companies, following in Tesla’s footsteps (Li Auto, for example).

I also want to expand my coverage to include how AI will affect our work, so I am exploring how our labor force will be impacted by physical AI adoption, and potentially then investigating upcoming AI software or how AI will affect our most popular internet-era software platforms.

There is just so much to learn and write about! For now, toodeloo~

