From computers to machines that do the work for you — how far have we really come?

And yet, there’s still a whole new world of AI waiting to unfold.

“AI’s next stage is called physical AI. Physical AI is where AI interacts with the physical world. It means robotics.”

– Jensen Huang, NVIDIA, Jan 16, 2025

Physical AI is the stage we aren’t quite ready for — but then again, were we ever really prepared? ChatGPT showed up, and we adapted.

Physical AI will be no different.

That’s the thing about humans — we adapt.

In this blog, we’ll explore:

- What Physical AI is

- How Traditional AI works

- What Generative Physical AI is

- The key differences between Physical AI and Traditional AI

Let’s dive in if you’re even a little curious (which I know you are).

What is Physical AI?

Physical AI is artificial intelligence that can sense, move, and interact with the physical world through machines like robots and self-driving cars.

It combines smart decision-making with real-world actions — basically, AI with a body.

Evolution of AI

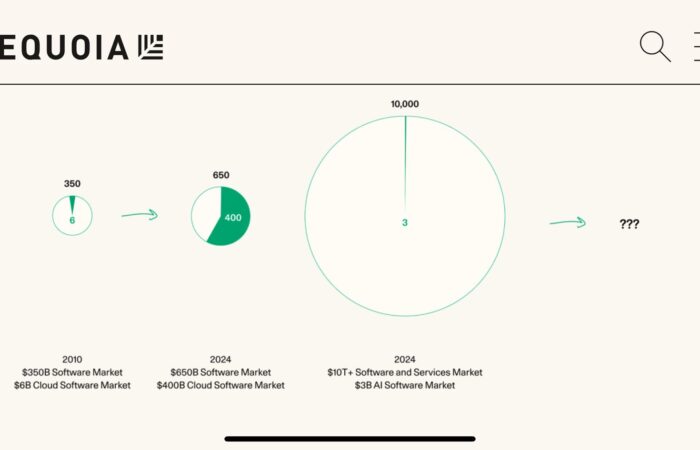

For over 60 years, we lived in the era of Software 1.0 — code written by humans and run on CPUs.

Then came Software 2.0 — where machines started learning from data using neural networks, powered by GPUs.

That’s what gave rise to what we now call Generative AI — the kind of AI that can write, draw, design, and even chat with you.

But now, we’re stepping into a new stage: Physical AI.

And no, it’s not just more software — it’s AI that can move, see, sense, and interact with the real world.

Let’s break it down.

Generative AI is the brain.

Physical AI? That’s the brain + body.

→ Generative AI can write you an email.

→ Physical AI can deliver your groceries.

It’s AI with hands, wheels, eyes — and a whole lot of smarts.

So, what does Physical AI really do?

Physical AI is designed to interact with humans and the environment. It powers:

- Robots that assist in surgeries

- Self-driving cars that can navigate traffic

- Smart vacuum cleaners that figure out where to clean

- Even factory machines that can assemble products faster than humans

It’s not just automation. It’s intelligence in motion.

How does Physical AI work?

To function in the real world, physical AI relies on two main components:

Source: Eye for Tech

- Actuators:

These are like muscles, such as wheels and robotic arms or legs, that help a robot move or lift things.

- Sensors:

These are the eyes and ears, such as cameras, radar, and microphones, that help the machine “see” and respond to what’s around it.

The AI takes in this sensory information, processes it, and then acts based on what it learns.
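To make this sense, process, act cycle concrete, here is a minimal Python sketch of a single robot control loop. Everything in it is hypothetical: the sensor fields, the 0.5 m safety distance, and the wheel-speed commands are stand-ins. A real robot would read hardware drivers and would typically use a learned model, not a few if-statements, for the “process” step.

```python
from dataclasses import dataclass


# Hypothetical sensor snapshot; a real robot would fill this from hardware drivers.
@dataclass
class SensorReading:
    obstacle_distance_m: float  # e.g. from radar or a depth camera
    heard_stop_command: bool    # e.g. from a microphone plus a speech model


def decide(reading: SensorReading, safe_distance_m: float = 0.5) -> str:
    """The 'process' step: turn sensor input into an action name."""
    if reading.heard_stop_command:
        return "stop"
    if reading.obstacle_distance_m < safe_distance_m:
        return "turn"
    return "forward"


def actuate(action: str) -> tuple[float, float]:
    """The 'act' step: map an action to (left, right) wheel speeds for the motors."""
    speeds = {"forward": (1.0, 1.0), "turn": (0.5, -0.5), "stop": (0.0, 0.0)}
    return speeds[action]


def control_loop(readings: list[SensorReading]) -> list[tuple[float, float]]:
    """Sense, decide, act: once for every incoming sensor reading."""
    commands = []
    for reading in readings:              # sense
        action = decide(reading)          # process
        commands.append(actuate(action))  # act
    return commands
```

Feeding it three readings (a clear path, an obstacle at 0.3 m, and a spoken “stop”) produces forward, turn, and stop wheel commands, in that order.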

How it all comes together (NVIDIA’s approach):

- Training starts on DGX computers, where models learn from data.

- Next, the model is refined with reinforcement learning in simulated environments built on NVIDIA Omniverse.

- Finally, the trained AI is deployed on Jetson AGX computers, the brains inside real-world robots.
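NVIDIA’s real stack is far beyond a blog example. Still, the core idea of the middle step can be sketched with tabular Q-learning: a robot learns a behavior by trial and error inside a simulator, and the frozen result is then “shipped.” The corridor simulator, rewards, and hyperparameters below are invented for illustration. The code does not use any DGX, Omniverse, or Jetson API.

```python
import random

# Toy stand-in for a simulated environment: a 1-D corridor with cells 0..4.
# The robot starts at cell 0 and is rewarded for reaching the goal at cell 4.
N_CELLS = 5
GOAL = N_CELLS - 1
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right


def step(state: int, action_idx: int) -> tuple[int, float, bool]:
    """One simulator tick: returns (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action_idx], 0), GOAL)
    if next_state == GOAL:
        return next_state, 1.0, True
    return next_state, -0.01, False  # small cost per move favors short routes


def greedy(values: list[float]) -> int:
    """Index of the best-valued action (the first one wins ties)."""
    return max(range(len(values)), key=values.__getitem__)


def train_in_simulation(episodes: int = 2000, alpha: float = 0.5,
                        gamma: float = 0.9, epsilon: float = 0.2,
                        seed: int = 0) -> list[list[float]]:
    """Tabular Q-learning: estimate action values purely from simulated trials."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                a = greedy(q[state])             # exploit current estimate
            next_state, reward, done = step(state, a)
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state, steps = next_state, steps + 1
    return q


def deploy_policy(q: list[list[float]]) -> list[int]:
    """'Deployment': freeze the learned table into one fixed action per cell."""
    return [greedy(q[s]) for s in range(N_CELLS)]
```

The agent never sees a real corridor. All its experience comes from `step`, which is the point of training in simulation: mistakes are free there. `deploy_policy` then produces a fixed lookup table that a small onboard computer could run cheaply.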

Real-Life Examples of Physical AI

- Roomba (by iRobot):

An AI-powered vacuum that learns your floor plan, avoids obstacles, and cleans your house — even while you’re not home.

- Da Vinci Surgical System (by Intuitive Surgical):

A robotic system that assists surgeons in performing precise, minimally invasive procedures, improving accuracy and reducing recovery times.

Physical AI is already reshaping how we live and work.

From healthcare to logistics, from our homes to our highways — it’s AI stepping out of the screen and into the real world.

How Traditional AI Works

Traditional AI is the type of AI that works mostly with data, logic, and rules — it lives in computers and helps with thinking, not doing.

It powers things like voice assistants, recommendation systems (like Netflix or Amazon), and fraud detection tools.

Let’s look at an example:

Imagine you work at a telecom company.

You’ve got loads of customer data sitting in a repository — their usage, billing history, complaints, etc.

Now, let’s say you want to find out which customers might cancel their service soon (aka churn).

Here’s what you do:

- You move that data into an analytics platform.

- You build predictive models that tell you: “Hey, these customers might leave.”

- Then you plug those models into an application that helps you take action, like offering discounts or sending reminders to keep them around.

At this point, it’s not full-blown AI; it’s just predictive analytics.

But if you add a feedback loop, where the system learns from the outcomes of past decisions (who actually stayed, who left despite offers), that’s when it becomes AI.

So, the more it sees what works and what doesn’t, the smarter it gets over time.
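The step from “predictive analytics” to a learning system can be sketched as a model that keeps updating itself from real outcomes. Below is a minimal online logistic regression in plain Python. The two features (complaints in the last 90 days, tenure in years) and the outcome data are invented for illustration. A production churn pipeline would use a proper ML library and far more data.

```python
import math


class ChurnModel:
    """Tiny online logistic regression: scores churn risk, then learns from outcomes."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, features: list[float]) -> float:
        """Estimated probability (0 to 1) that this customer will churn."""
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        return 1.0 / (1.0 + math.exp(-z))

    def learn(self, features: list[float], churned: bool) -> None:
        """Feedback step: nudge the weights toward what actually happened."""
        error = self.predict(features) - (1.0 if churned else 0.0)
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias -= self.learning_rate * error


# Hypothetical outcomes: (complaints in last 90 days, tenure in years) -> did they leave?
HISTORY = [
    ([3.0, 0.5], True),
    ([4.0, 0.25], True),
    ([0.0, 5.0], False),
    ([1.0, 4.0], False),
]


def run_feedback_loop(model: ChurnModel, outcomes, epochs: int = 200) -> None:
    """Replay observed outcomes so the model's predictions track real behavior."""
    for _ in range(epochs):
        for features, churned in outcomes:
            model.learn(features, churned)
```

Before any feedback, the model scores every customer at 0.5. After replaying the outcomes, it rates the frequent complainer well above 0.5 and the long-tenured customer well below it. In a live system, each new “stayed” or “left” result would be fed to `learn` as it arrives.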

How is This Different from Physical AI?

Traditional AI works in digital spaces.

It:

- Thinks

- Predicts

- Analyzes

But it doesn’t move, see, or touch anything.

Physical AI, on the other hand, takes things a step further.

It:

- Thinks and acts

- Senses the real world using cameras, sensors, etc.

- Moves around and manipulates physical things using motors, wheels, or robotic arms

So while traditional AI might predict which customer will cancel, Physical AI could be the robot delivering a router to that customer’s doorstep or a smart machine fixing network issues on-site.

How is Generative AI different from Traditional and Physical AI?

Generative AI is designed to create new content.

It learns from huge amounts of data (text, images, audio, etc.), understands the patterns, and then produces brand new versions of that content.

For instance, ChatGPT can write blog posts or answer your questions.

Let’s think of AI like stages of human ability:

- Traditional AI is like someone who can analyze data and make smart decisions.

- Physical AI is like someone who can analyze, think, and also move, like lifting, navigating, or assembling things.

- Generative AI is like someone who can create new stuff — write poems, draw pictures, generate code, or make music.

In short:

→ Traditional AI is smart.

→ Generative AI is creative.

→ Physical AI is both smart and physical.

Where Traditional AI Falls Short in the Physical World

Traditional AI is great at thinking with data, but it struggles when it steps outside its comfort zone — the digital world.

It works on predefined rules and patterns.

So, if something unexpected happens, it doesn’t know how to handle it.

Let’s look at a couple of examples.

Imagine you have a food delivery app that uses AI to recommend your next meal.

It looks at your past orders and suggests something similar, like pizza, if you often order Italian.

Now, let’s say there’s a massive traffic jam in your area, and deliveries are delayed.

Will the AI know to suggest something faster to cook at home, or give you a warning?

Nope — because it wasn’t trained to handle real-world conditions like that.

It only knows your data, not what’s physically happening outside.

Another example:

Say traditional AI is used in a factory to detect defects in bottles.

It’s trained on images of broken or cracked bottles.

But what if a new kind of defect shows up — like a label slightly misprinted or a bottle slightly tilted?

If the AI wasn’t trained on that exact issue, it might miss it completely.

Why?

Because traditional AI can’t adapt on the fly.

It doesn’t see, feel, or move.

→ It only understands what it has already seen in data.

Anything beyond that? It’s confused.
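The bottle example can be sketched with a nearest-centroid classifier, one of the simplest “learn from labeled data” models. It was only ever shown “ok” and “cracked” bottles, so it has no way to say “I haven’t seen this before.” The two features and all the numbers below are hypothetical, chosen to illustrate the failure mode rather than to model a real inspection system.

```python
import math

# Hypothetical features extracted from each bottle image:
# (crack_score, label_offset_mm). Both the features and the data are invented.
TRAINING_DATA = {
    "ok":      [(0.0, 0.1), (0.1, 0.0)],
    "cracked": [(0.9, 0.1), (1.0, 0.0)],
}


def centroid(points):
    """Average feature vector for one class."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))


CENTROIDS = {label: centroid(pts) for label, pts in TRAINING_DATA.items()}


def classify(features):
    """Nearest-centroid rule: always answers with one of the labels it was trained on."""
    return min(CENTROIDS, key=lambda label: math.dist(features, CENTROIDS[label]))
```

`classify((0.95, 0.0))` correctly returns `"cracked"`. A bottle with a badly misprinted label, `classify((0.05, 3.0))`, comes back as `"ok"`: it looks more like the “ok” examples than the “cracked” ones, so it is waved through.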

That’s where Physical AI comes in.

It can:

- Sense the environment

- Adjust to unexpected situations

- Act accordingly.

Generative Physical AI: A New Frontier

Generative AI + Physical AI = a game-changer.

This new form is called Generative Physical AI, and it’s where things start to feel really futuristic.

So, what exactly is it?

Let’s take a closer look.

What Makes It “Generative”?

Unlike traditional robots that follow a set of fixed instructions, Generative Physical AI can learn, adapt, and come up with new physical responses on its own.

It’s not just reacting — it’s thinking and doing, creatively.

Let’s break it down with an example:

Imagine a robot helping you clean your room.

- A traditional robot might just vacuum the floor in straight lines.

- But a Generative Physical AI robot could:

- Notice your socks under the bed

- Figure out how to pick them up

- Fold your blanket

- Even suggest organizing your books — all without being told exactly how to do it.

Why?

Because it learns from how you live, adapts to your routines, and responds in smarter, more helpful ways — even in situations it hasn’t seen before.

In short:

- It learns like Generative AI

- It acts like Physical AI

- And it adapts like a human

That’s what makes it a new frontier.

We’re no longer just teaching machines to do — we’re teaching them to think and move creatively in the real world.

Applications of Generative Physical AI

Now that we know what Generative Physical AI is, let’s look at where it’s being used. And honestly? It’s pretty cool.

- Smart Robots in Warehouses:

These robots don’t just lift boxes, they:

- Figure out the best way to move them

- Avoid obstacles

- Even adjust their paths if something changes.

For example, Amazon’s Proteus robot.

It’s an autonomous warehouse robot that can navigate busy spaces, detect obstacles (including humans), and adjust its movement on the fly — all without needing fixed paths.
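Amazon hasn’t spelled out Proteus’s navigation internals here, so treat this as a generic illustration, not its actual method. The sketch plans a shortest route on a grid map with breadth-first search. When a new obstacle, such as a person, lands on the current route, the robot replans from wherever it is. The grid, the `surprises` schedule, and the function names are all hypothetical.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def shortest_path(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Breadth-first search on a grid (0 = free floor, 1 = blocked)."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back from the goal to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


def navigate(grid, start: Cell, goal: Cell, surprises: dict[int, Cell]) -> Optional[list[Cell]]:
    """Drive cell by cell, replanning whenever a new obstacle lands on the route.

    `surprises` maps a step number to a cell that becomes blocked at that step
    (for example, a person walking into the aisle)."""
    grid = [row[:] for row in grid]  # work on a copy of the caller's map
    plan = shortest_path(grid, start, goal)
    if plan is None:
        return None
    position, trail, step = start, [start], 0
    while position != goal:
        blocked = surprises.get(step)
        if blocked is not None:
            grid[blocked[0]][blocked[1]] = 1
            if blocked in plan:
                plan = shortest_path(grid, position, goal)  # replan from here
                if plan is None:
                    return None
        position = plan[plan.index(position) + 1]
        trail.append(position)
        step += 1
    return trail
```

On an empty 3×3 floor, the robot goes straight from `(0, 0)` to `(0, 2)`. If `(0, 1)` becomes blocked at step 0, it detours through the row below and still arrives, without anyone redrawing a fixed path.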

- Human-like Machines in Healthcare:

Imagine a robot that assists doctors during surgery — not just by holding tools, but by adjusting its actions based on the doctor’s hand movements or changes in the patient’s condition.

It learns how to work with humans, not just for them.

For instance, the Da Vinci Robot by Intuitive Surgical.

This robotic system helps doctors perform minimally invasive surgeries.

It can mirror and enhance the surgeon’s movements, providing precision and real-time adjustments during delicate procedures.

- Adaptive Tools in Factories:

These machines can detect when something’s off in the production line — like a loose screw or a faulty part — and fix it without waiting for human input.

They adapt in real-time, improving efficiency and reducing mistakes.

For instance, Tesla’s AI-powered robotic arms.

These robots:

- Perform repetitive tasks like welding or assembly

- Use sensors and AI to adapt to unexpected changes on the production line.

For instance, a robot can catch a misaligned part and correct it on the spot.
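This is not Tesla’s implementation. It is a generic sketch of the kind of feedback loop that “catch and correct” relies on: a proportional controller that measures a part’s offset, nudges it back by a fraction of the error, and repeats until it is within tolerance. The gain, tolerance, and the assumption that each nudge lands exactly as commanded are all simplifications for illustration.

```python
def correct_alignment(offset_mm: float, gain: float = 0.6,
                      tolerance_mm: float = 0.05, max_steps: int = 50) -> list[float]:
    """Proportional correction loop: measure the offset, push back, repeat.

    Returns the measured offset after each cycle, starting with the initial one."""
    history = [offset_mm]
    for _ in range(max_steps):
        if abs(offset_mm) <= tolerance_mm:
            break                          # close enough: part is aligned
        correction = -gain * offset_mm     # push toward the nominal position
        offset_mm += correction            # assumes the arm moves exactly as commanded
        history.append(offset_mm)
    return history
```

With a 2 mm initial misalignment and a gain of 0.6, each cycle removes 60% of the remaining error. The part is within 0.05 mm after five corrections. A real line would add sensor noise, actuator limits, and safety checks.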

Physical AI vs Traditional AI: Key Differences

| Aspect | Traditional AI | Physical AI |

| Analogy | Brain | Brain + Body |

| Presence | Disembodied (exists only in software or digital platforms) | Embodied (exists in physical machines and robots) |

| Interaction | Works with data and digital inputs | Interacts with the physical world through sensors and movement |

| Adaptability | Follows pre-defined rules | Learns and adapts in real-time |

| Example | Predicting customer churn | Self-driving cars avoiding obstacles |

| Main Focus | Decision-making and predictions | Decision-making and physical actions |

| Safety Application | Limited to digital environments | Crucial for real-world use cases like autonomous vehicles |

You see:

- Traditional AI is like a smart assistant on your computer.

It can help with answers, automate tasks, and make predictions based on patterns.

- Physical AI is like that assistant stepping out of the screen.

Driving your car, helping in factories, or even assisting in surgery — it blends thinking with doing.

The real beauty of Physical AI lies in its real-time adaptability.

While traditional AI waits for instructions or follows rules, physical AI can react, adjust, and move, just like humans.

Conclusion: The AI Journey Isn’t Over Yet

From software that thinks to machines that move, AI has come a long way.

And yet—this is just the beginning.

- Traditional AI changed how we process data.

- Generative Physical AI is now changing how machines interact with the real world.

But just like ChatGPT wasn’t the end of the AI road, Physical AI won’t be either.

There’s always going to be a “next stage.”

That said, one thing still remains on top: human intelligence.

Even in a world of intelligent machines, 88% of consumers say human interaction is still essential to them when dealing with businesses (PwC, 2023).

That’s why the smartest move you can make isn’t choosing between AI or humans—it’s combining both.

At AI Business Asia, we blend the power of AI with sharp human insight to help your business grow effortlessly.

From content to strategy to automation—we’ve got you covered.

Ready to grow your business with ease?

Let’s get smart—together.
