OpenAI’s o3 and o4-mini are more than just the next models—they mark a big step forward in multimodal reasoning.

These new models are built for multimodal reasoning, meaning they can understand and process different types of data (like text, images, and more) to solve complex problems.

OpenAI’s o3 can make up to 600 tool calls in a row when tackling a tough challenge, showing just how far reasoning in AI has come.

What makes o3 and o4-mini even more impressive is their efficiency.

They don’t just perform better—they do it faster and at a lower cost.

Since GPT-4, OpenAI has reduced per-token pricing by 95%, making powerful AI more accessible for real-world use.

In this blog, you’ll discover:

- What makes o3 and o4-mini powerful and efficient

- How these models handle complex tasks using tool calls

- And how you can build context-aware multimodal reasoning applications using generative AI on AWS

If you’re looking to understand what’s new, what’s possible, and how to leverage these tools for real-world impact, this blog is for you.

What is Multimodal Reasoning?

Multimodal reasoning is the ability of an AI system to understand and process multiple types of data (text, images, audio, and video) at the same time, so it can make smarter and more accurate decisions.

Let’s understand this with an example.

Imagine you’re trying to understand a story—but instead of just reading it, you also see pictures, hear voices, and maybe even watch a short video.

All these different types of information help you understand the story better, right?

That’s exactly what multimodal reasoning is all about.

It’s when AI doesn’t just look at one kind of data (like just text), but learns to understand and connect multiple types of data—like text, images, audio, or even video—all at once.

Why is this important?

Because in the real world, we don’t communicate using just one format.

- We speak

- We write

- We share photos, videos, and voice notes

For AI to truly help us, it needs to make sense of all of that together.

With multimodal reasoning, AI can do things like:

- Look at an image and describe what’s happening in it

- Read a document and analyze the chart shown inside it

- Watch a video and answer questions about it

It’s a huge step forward in making AI more helpful, more human-like, and more capable of handling real-world tasks.

OpenAI’s o3 and Its Role in Multimodal Reasoning

You’ve probably heard about OpenAI’s o3 and o4-mini being called “reasoning models.”

What does that mean?

Think of it like this:

These models don’t just spit out answers right away.

They think, just like a person would when solving a tricky problem.

- They pause

- Weigh the options

- Then respond with something more thoughtful and accurate.

What they’re great at:

- Solving multi-step or layered problems

- Answering research-heavy or deep-dive questions

- Brainstorming fresh, creative ideas

What’s changing?

OpenAI is phasing out older models such as o1 and, for subscribers on the $200/month Pro plan, o1 pro.

They’re getting replaced by o3, which is now one of the smartest models OpenAI has released.

It brings more advanced reasoning skills to the table and can handle complex tasks better.

Performance-wise:

- o3 is smarter and more capable than o1 and o3-mini.

- But when it comes to coding benchmarks, o4-mini takes the crown, with a Codeforces competitive-programming rating of 2719 that puts it among the top 200 coders in the world.

- In multimodal reasoning (where it interprets text, images, etc.), o3 scored 82%, just slightly better than o4-mini at 81%.

OpenAI’s o3 and o4-mini pricing:

So, depending on your task, either one could be better.

Real-World Example: o3 in Action

Let’s say you’re chatting with o3, and you’ve enabled the memory feature (you can turn it on in settings). Now, it remembers your past convos.

Here’s what Skill Leap AI tested:

They asked o3: “Based on what you know about me, can you share something in the news today that I’d find interesting?”

And o3 actually nailed it.

It:

- Used memory to recall past chats

- Searched the current news

- Applied reasoning to figure out what the user might like

Then it explained its reasoning:

“I picked this because most of our past chats are about AI and content creation, which you’re into.”

And guess what? Skill Leap AI confirmed — ChatGPT knew them pretty well.
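The flow above (recall memory, search the news, then reason over both) can be pictured as a loop in which the model keeps requesting tools until it has enough to answer. The sketch below only illustrates that loop and is not OpenAI’s implementation: `fake_model`, `recall_memory`, `search_news`, and the `max_calls` budget are all hypothetical stand-ins, and no real API is called.

```python
# Hypothetical tools; a real app would query a memory store and a news API.
def recall_memory(_query):
    return "Past chats are mostly about AI and content creation."


def search_news(query):
    return f"Top story today about {query}."


TOOLS = {"recall_memory": recall_memory, "search_news": search_news}


def fake_model(history):
    """Stand-in for a reasoning model: picks the next step from what it has seen."""
    tools_used = [step["tool"] for step in history]
    if "recall_memory" not in tools_used:
        return {"tool": "recall_memory", "input": "user interests"}
    if "search_news" not in tools_used:
        return {"tool": "search_news", "input": "AI and content creation"}
    # Enough context gathered: answer instead of requesting another tool.
    return {"answer": f"{history[-1]['output']} Picked because: {history[0]['output']}"}


def run_agent(model, tools, max_calls=600):
    """Give the model up to max_calls turns; each turn is a tool call or the answer."""
    history = []
    for _ in range(max_calls):
        step = model(history)
        if "answer" in step:
            return step["answer"], history
        output = tools[step["tool"]](step["input"])
        history.append({"tool": step["tool"], "input": step["input"], "output": output})
    raise RuntimeError("turn budget exhausted without a final answer")


if __name__ == "__main__":
    answer, history = run_agent(fake_model, TOOLS)
    print(answer)
    print(f"tool calls made: {len(history)}")
```

Here the stand-in model makes two tool calls before answering. The default budget of 600 simply echoes the figure quoted earlier for o3; a production loop would pick its own limit.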

Meet o4-mini: Lightweight, Yet Powerful

Let’s talk about o4-mini—OpenAI’s latest reasoning model that’s small but mighty.

If o3 is the deep thinker, o4-mini is the speedster.

It’s designed to give you quick, smart answers without skipping the reasoning part.

Think of it as the model you call on when you want fast and sharp replies.

Extra Powers That Come With o4-mini

Just like o3, o4-mini has access to all the cool tools:

- It can search the web when needed

- It uses memory to recall your previous chats and personalize its responses

- You can upload documents or images, and it’ll analyze them

- Need an image? It can generate one

- Great at visual reasoning, math, and code

Real-World Example: How Smart Is It, Really?

Test 1: Prediction question

Skill Leap AI asked o4-mini:

“Make a prediction for the tariff level between the US and China in June 2025. Give a clear answer in 2–3 sentences.”

Instead of making random guesses, o4-mini stayed grounded, saying that without any new agreements, tariffs would likely stay at the current 145%.

→ Smart move—it didn’t overreach or make false claims.

Test 2: A tricky math puzzle

Question: A horse costs $50, a chicken $20, and a goat $40. You bought 4 animals for $140. What did you buy?

→ o4-mini not only solved it but also gave two possible answers, showing its reasoning power in real time.
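The puzzle is small enough to check by brute force. The sketch below is an illustration, not what o4-mini runs internally, and the function name `solve_purchase` is made up for this example. It tries every mix of horses, chickens, and goats that adds up to 4 animals and keeps the ones that cost exactly $140.

```python
from itertools import product

# Prices from the puzzle, in dollars.
PRICES = {"horse": 50, "chicken": 20, "goat": 40}


def solve_purchase(total_animals=4, total_cost=140):
    """Return every (horses, chickens, goats) count that matches the puzzle."""
    solutions = []
    counts = range(total_animals + 1)
    for horses, chickens, goats in product(counts, repeat=3):
        if horses + chickens + goats != total_animals:
            continue
        cost = (horses * PRICES["horse"]
                + chickens * PRICES["chicken"]
                + goats * PRICES["goat"])
        if cost == total_cost:
            solutions.append((horses, chickens, goats))
    return solutions


if __name__ == "__main__":
    for horses, chickens, goats in solve_purchase():
        print(f"{horses} horses, {chickens} chickens, {goats} goats")
```

The search finds exactly two combinations: 1 chicken plus 3 goats, or 2 horses plus 2 chickens. That is consistent with the model reporting two possible answers.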

When Should You Use o4-mini Over o3?

Here’s when o4-mini shines:

- Speed matters – It gives faster responses than o3.

- You’re on the go – It’s the lighter of the two, which suits latency-sensitive apps. Note that it still runs in OpenAI’s cloud rather than on edge devices.

- You need quick logic or visual analysis – Like solving puzzles or analyzing images.

- You’re coding – It’s super efficient at code generation and problem-solving.

In short, o4-mini = fast + smart + lightweight

Right now, it’s a strong pick for coding, visual tasks, and latency-sensitive use cases.

→ If you want speed and solid reasoning, o4-mini is your go-to.

Generative AI on AWS: Building Context-Aware Multimodal Reasoning Applications

Now that we have powerful models like OpenAI’s o3 and o4-mini, the next question is—how do you use them to build smart apps?

This is where AWS (Amazon Web Services) comes in.

How AWS Helps

AWS gives you the infrastructure, tools, and cloud services you need to:

- Call hosted AI models like o3 and o4-mini from your application

- Store and process data (text, images, audio, etc.)

- Build applications that understand the context—like what a user wants, what’s happening in the conversation, or what’s shown in an image

- Scale your apps easily as more people use them

AWS Tools That Make It Easy

Here are some AWS tools and services that help developers build multimodal reasoning applications:

- Amazon SageMaker – To train and deploy machine learning models

- AWS Lambda – For running code automatically without needing servers

- Amazon S3 – For storing files like images, audio, and documents

- Amazon API Gateway – To connect your app to the AI model

- Amazon Bedrock – For accessing foundation models from multiple providers through a single API (o3 and o4-mini themselves are accessed through OpenAI’s API)

- EC2 (Elastic Compute Cloud) – For running heavy workloads if needed

Example Use Case: A Smart Medical Assistant

Let’s say a healthcare company wants to build a smart assistant using OpenAI’s o3 on AWS.

Here’s how it could work:

Step 1: A doctor uploads a patient’s X-ray image and symptoms into the system.

Step 2: The app (powered by o3) looks at both the image and text and gives a possible diagnosis.

Step 3: AWS handles the heavy lifting around the model: storing the files (S3), running the code that calls o3 through OpenAI’s API (Lambda), and exposing the app to users (API Gateway). SageMaker can host any custom models the app also needs.

This is context-aware multimodal reasoning in action—and it’s made possible by combining OpenAI’s models with AWS.
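As a rough sketch of Step 2, the function below packages the symptoms text and the X-ray bytes into a single multimodal request body. It assumes the message shape used by OpenAI’s Chat Completions API for image inputs (a `content` list mixing `text` and `image_url` parts, with the image passed as a base64 data URL). The function name, the prompt wording, and the sample bytes are made up for illustration, and fetching the file from S3 and sending the request are left out.

```python
import base64
import json


def build_request(symptoms, image_bytes, model="o3", mime_type="image/png"):
    """Combine text and an image into one chat request body (nothing is sent)."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    prompt = (
        f"Patient symptoms: {symptoms}\n"
        "Describe notable findings in the attached X-ray for a doctor to review."
    )
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes; a real app would read the uploaded X-ray file instead.
    body = build_request("persistent cough, mild fever", b"\x89PNG placeholder")
    print(json.dumps(body, indent=2)[:300])
```

In a setup like the one described, a Lambda function could build this body after reading the image from S3 and then post it to the model API with credentials kept on the server side.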

Why OpenAI’s o3 and o4-mini Are Game Changers

OpenAI didn’t just update its models — it launched a whole new level of smart.

The o3 and o4-mini models are more thoughtful, more accurate, and just better at solving real-world problems.

Whether you’re coding, analyzing visuals, brainstorming content, or just chatting, these models can think things through in a much more human-like way.

Let’s Break It Down: o3 vs. o4-mini

| Feature | o3 – The Bigger, Brainier Model | o4-mini – The Fast, Efficient Multitasker |
| --- | --- | --- |
| Performance | Great at deep reasoning, complex coding, science, and math problems | Super quick, handles everyday tasks with ease |
| Visual Skills | Excellent at understanding and analyzing images, graphs, and charts | Strong at visual tasks for its size, fast and sharp |
| Accuracy | Makes 20% fewer major errors than o1 on difficult real-world tasks, according to OpenAI | Very reliable for a lightweight model |
| Speed | Slower than o4-mini, but more thoughtful and thorough | The faster of the two for reasoning and real-time responses |
| Use Case | Ideal for research-heavy, multi-step thinking, and detailed projects | Perfect for customer support, high-volume tasks, and quick turnarounds |
| Memory & Personalization | Remembers past chats to give more personalized answers | Also uses memory to keep replies relevant and efficient |
| Cost | Premium model, more powerful but pricier | More budget-friendly and scalable |

What They Both Do Exceptionally Well

- Better context & memory: They remember previous chats, so the responses feel more personalized and connected.

- More natural replies: Conversations feel smoother and more human.

- Follow instructions better: You ask, they get it, and deliver with less back-and-forth.

- Image “thinking”: Upload a sketch, chart, or even a blurry whiteboard — they can understand it, analyze it, and help you work through the problem. Yes, even rotating or zooming in when needed.

What Are the Real Benefits for Businesses & Developers?

Here’s why o3 and o4-mini are a big win:

- Developers can debug code, analyze screenshots, and even ask for help with system design

- Teams can automate smarter, more personalized workflows

- Marketers and content creators can brainstorm sharper content ideas, with AI that “gets” context

- Customer service becomes faster, smarter, and more scalable with o4-mini’s high-speed reasoning

OpenAI’s o3 and o4-mini aren’t just smarter — they’re also more practical.

They think better. Understand better. And adapt better.

Whether you want deep thinking with o3 or fast, flexible help with o4-mini, these models are changing how we work, create, and problem-solve with AI.

Big brains. Quick moves. Real results.

What Does The Internet Have to Say About This New Launch?

After going through tons of real user reviews and hands-on testing, here’s what folks say about OpenAI’s o3, o4-mini, and how they compare to other models like Gemini 2.5 or Claude.

o4-mini: Great at Math and Coding (But That’s Its Main Thing)

Think of o4-mini like a math nerd who’s laser-focused on algorithms, coding, and solving technical problems.

Maths and Coding:

o4-mini is a beast who, at times, sleeps.

o3 is like that smart friend who’s good at everything—knows a bit of coding, some history, and can hold a great conversation.

What users say about o3:

- It’s better for general tasks, creativity, and mixed-topic reasoning

- More likely to understand context-heavy or multi-layered questions

- Sometimes hallucinates answers or makes things up confidently

Bottom line: Great for tasks where you need someone with broad understanding, not just a specialist.

What people say about o4-mini:

- It’s excellent at real-world programming tasks

- It gives deep, well-thought-out solutions for coding problems

- It “thinks before it answers,” like planning before speaking

But…

- It sometimes struggles to follow instructions consistently

- Sometimes skips code blocks or says “// your snippet goes here”

- For basic coding tasks, some still prefer o3

In short: If you need a focused coding buddy, o4-mini is your go-to.

But don’t ask it to write you a poem or explain a design diagram—it might miss the mark.

OpenAI’s o3 vs o4-mini – How to Choose?

Here’s a simple way to think about them:

- Use o4-mini for tasks that are math-heavy, logic-based, or coding-focused

- Use o3 for tasks that require common sense, broad reasoning, or creativity

Like someone said:

“o4-mini is like a guy who’s amazing at math because he has no other hobbies. o3 is like a super curious polymath who’s good at a lot of things.”
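The rule of thumb above can be written down as a small routing helper. This is only a sketch: the task categories and the `pick_model` function are invented for illustration, the fallback to o3 is an assumption, and real routing would likely also weigh cost and latency.

```python
# Task categories taken from the rule of thumb above; the labels are illustrative.
O4_MINI_TASKS = {"math", "logic", "coding"}
O3_TASKS = {"common_sense", "broad_reasoning", "creativity"}


def pick_model(task_type):
    """Return the model name suggested for a task category."""
    task = task_type.strip().lower()
    if task in O4_MINI_TASKS:
        return "o4-mini"
    if task in O3_TASKS:
        return "o3"
    # Assumption: unknown categories fall back to the broader generalist.
    return "o3"


if __name__ == "__main__":
    for task in ("coding", "creativity", "translation"):
        print(task, "->", pick_model(task))
```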

How Do They Compare to Other Models?

- Gemini 2.5 is still beating o4-mini for many users in accuracy and diagram understanding

- Claude 3.7 and others like GPT-4 Omni (GPT-4o) are seen as good all-rounders too

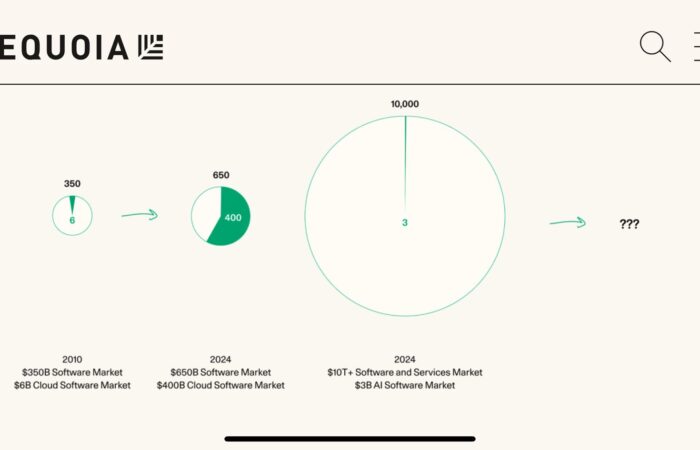

The Bigger Picture: Insane Progress in Just 2 Months!

Some users are blown away by how fast AI models are improving. In just a couple of months:

- We’ve seen multiple “kings” like Claude 3.7, Gemini 2.5, and now o4-mini

- People are dreaming of AI that can do its own research, write papers, and even help us get closer to AGI (Artificial General Intelligence)

Conclusion

OpenAI’s o3 and o4-mini are clear game changers in the world of AI.

From sharper context understanding to faster response times, they’re revolutionizing multimodal reasoning — helping AI understand not just words, but also:

- Images

- Charts

- Complex patterns across formats.

Whether you’re building long-form content, solving tough math, or analyzing visuals, these models step up in a big way.

But here’s the real talk:

Even with all these improvements, they’re still not perfect.

Like their older siblings, o3 and o4-mini can hallucinate — meaning they sometimes give confident answers that aren’t true.

So don’t get lazy.

Always fact-check, cross-verify, and remember that nothing beats the power of a thoughtful human mind guiding the process.

As we move forward, tools like OpenAI’s o3, combined with the scalability of generative AI on AWS, open doors to building context-aware multimodal reasoning applications at scale.

It’s the perfect time to explore how these models can fit into your workflows, platforms, or businesses.

The future of generative AI is here — and it’s fast, visual, and full of potential.

Just make sure you stay smarter than the tech you’re using.
