This year has marked a turning point in the AI industry, with transformative advancements redefining how we work, create, and innovate. Leading this wave of progress are ChatGPT-4 Turbo, Gemini 2.0, Claude 3.5, and Qwen2.5, models that have set new benchmarks for conversational and multimodal AI.

These aren’t just updates; they’re game-changing innovations that bring unique capabilities to the table. Whether you’re a business professional, a creative, or simply curious about the future of AI, this comparison unpacks their features, breakthroughs, and ideal applications to help you make an informed choice.

The Evolution of Models

ChatGPT: From GPT-3.5 to GPT-4 Turbo

GPT-3.5 (2022):

- Improved Contextual Understanding: GPT-3.5 introduced a significant leap in contextual accuracy and response quality compared to GPT-3. This version was praised for its ability to produce coherent, human-like text with minimal errors, revolutionizing conversational AI.

- Widespread Adoption: Its affordability and accessibility made it a favorite among businesses and individuals. It enabled practical use cases like customer service automation, content creation, and personalized educational tools.

- Mainstream Success: GPT-3.5’s ease of use set the stage for mainstream adoption of AI, becoming the go-to solution for organizations looking to streamline operations and improve efficiency.

GPT-4 (March 2023):

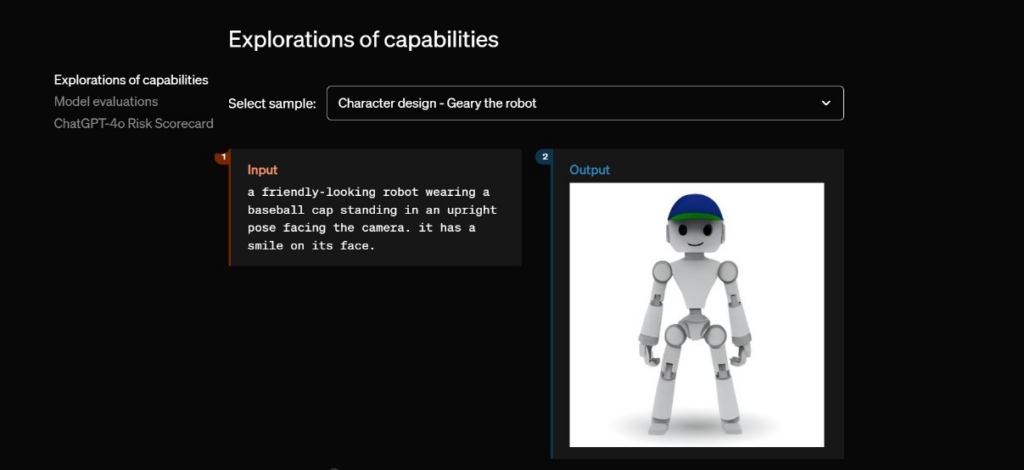

- Multimodal Capabilities: GPT-4 introduced the ability to process both text and images, broadening its range of applications. For example, it could analyze visual data, generate image descriptions, and combine text with visual elements in workflows.

- Improved Reasoning: Enhanced reasoning abilities allowed GPT-4 to handle more complex queries and deliver precise, contextually rich responses, making it ideal for research, education, and creative writing.

- Expanded Context Window: With support for up to 32k tokens, GPT-4 enabled longer and more coherent conversations. Users could now process detailed reports, lengthy documents, and intricate project plans with ease.

- Adoption Across Industries: Businesses leveraged GPT-4 for drafting reports, automating marketing campaigns, and creating tailored customer experiences. Educators used it to design personalized learning journeys, while creators found it invaluable for content generation.

GPT-4 Turbo (Late 2023):

- Larger Context Window: GPT-4 Turbo pushed the context limit to 128k tokens, making it capable of processing extensive documents, large datasets, and intricate project plans in a single session.

- Speed and Efficiency: Turbo was designed to deliver responses at significantly higher speeds than GPT-4 while being more cost-effective, making it the preferred choice for enterprise-scale applications.

- Vision Processing: Advanced vision capabilities allowed it to analyze, interpret, and generate content from visual data. This feature proved especially valuable in industries like logistics, healthcare, and marketing.

- Task Automation: Introduced new automation features to streamline repetitive processes, from report generation to workflow optimization, boosting productivity across industries.

- Affordable Scalability: Despite its enhanced capabilities, GPT-4 Turbo was optimized for cost efficiency, enabling businesses to adopt powerful AI without overspending.
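
To make the context-window figures above concrete, here is a minimal sketch that estimates whether a document fits in a given window. The 4-characters-per-token ratio is a rough rule of thumb for English text and an assumption here, not an exact tokenizer. The function names are hypothetical, and the window sizes are the rounded figures quoted in this article.

```python
import math

# Context window sizes as quoted in this article, in tokens.
CONTEXT_WINDOWS = {
    "GPT-4": 32_000,
    "GPT-4 Turbo": 128_000,
}

# Assumption: roughly 4 characters per token for English prose.
# Real tokenizers vary by language and content.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate based on character count."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def models_that_fit(text: str, reserve_for_reply: int = 1_000) -> list[str]:
    """List models whose window holds the text plus room for a reply."""
    needed = estimate_tokens(text) + reserve_for_reply
    return [name for name, window in CONTEXT_WINDOWS.items() if window >= needed]


if __name__ == "__main__":
    report = "x" * 200_000  # stand-in for a 200,000-character report
    print(estimate_tokens(report))  # 50000
    print(models_that_fit(report))  # ['GPT-4 Turbo']
```

For anything close to the limit, an exact count from the provider's own tokenizer is the safer check.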

Gemini: From Gemini 1 to Gemini 2.0 Flash

Gemini 1 (2023):

- Google DeepMind’s initial foray into multimodal AI, designed to compete with OpenAI’s ChatGPT and Anthropic’s Claude.

- Focused on text and visual processing with basic integration across Google Workspace tools.

Gemini 1.5 (Early 2024):

- Introduced enhanced multimodal capabilities, expanding support for audio and video processing.

- Integrated deeper into Google’s ecosystem, enabling seamless workflows across Docs, Sheets, and Slides.

Gemini 2.0 (Late 2024):

- Marked a monumental upgrade with advanced agentic AI capabilities, laying the groundwork for autonomous task completion.

- Introduced native support for audio and image generation, further enhancing its multimodal processing abilities.

- Significantly optimized for speed and scalability, enabling low-latency performance for complex workflows.

- The model powers tools like Project Astra, a visual system that helps identify objects and navigate environments, and Project Mariner, an experimental Chrome extension that automates browser tasks.

Gemini 2.0 Flash Thinking (End of 2024):

- Groundbreaking Reasoning Capabilities: Gemini 2.0 Flash Thinking can break down problems into smaller tasks, enabling more robust outcomes in reasoning-based challenges. For example, it solves physics problems by “thinking” through a series of steps, mimicking structured human reasoning.

- True Multimodal Leadership: Works across text, images, audio, and video, and has demonstrated strength in combining visual and textual reasoning, making it well suited to complex problem-solving scenarios.

- Enhanced Agentic AI: Refined task automation enables users to delegate intricate workflows without manual oversight, further empowering productivity.

Claude: From Claude 1.0 to 3.5 Sonnet

Claude 1.0 (2023):

- Focus on AI Safety: Anthropic launched Claude 1.0 as a model specifically designed with AI safety and ethical use at its core. Its primary goal was to minimize biases in generated outputs, ensuring reliability and fairness across various applications.

- High-Quality Contextual Understanding: Claude 1.0 excelled in delivering contextually aware responses, making it suitable for sensitive and professional use cases, such as policy drafting, legal document analysis, and strategic planning.

- Adoption in Sensitive Industries: Its safety-first approach made it popular in sectors like healthcare and finance, where the stakes for accurate and unbiased AI outputs were particularly high.

Claude 2.0 (Mid-2023):

- Expanded Context Window: With support for up to 100k tokens, Claude 2.0 significantly enhanced its ability to handle large-scale, complex datasets and extended conversations. This made it a game-changer for businesses requiring in-depth document analysis and multi-step reasoning.

- Improved Reasoning Capabilities: Claude 2.0 introduced advanced comprehension, enabling it to tackle intricate problem-solving tasks with greater accuracy and depth. This made it particularly appealing for research-driven industries and high-level strategy development.

- Reliability and Safety Reinforced: Businesses increasingly relied on Claude 2.0 for its consistent performance and commitment to ethical AI. Its robust safeguards against harmful or biased outputs bolstered its reputation as a trusted tool for critical tasks.

- Adoption Across Industries: Popular among enterprises, Claude 2.0 was used for tasks like regulatory compliance checks, legal contract analysis, and creating policy guidelines, thanks to its ability to process complex information accurately and ethically.

Claude 3.5 Sonnet (Late 2024):

- Large Context Window: Claude 3.5 builds upon the advancements of its predecessors with a 200k token capacity, larger than GPT-4 Turbo’s 128k. This capability allows it to process entire books, extensive research papers, or large sets of legal documents in one session, providing depth and continuity in AI-assisted workflows.

- Vision Capabilities and Enhanced Multimodal Processing: Claude 3.5 retains the vision functionality introduced in Claude 3.0 but enhances it further to provide seamless integration of text, images, and other visual data. It excels at tasks such as analyzing diagrams, interpreting charts, and synthesizing insights from combined textual and visual content. This refinement makes it ideal for industries requiring precision and multimodal collaboration.

- Introduction of “Computer Use”: Claude 3.5 introduces the groundbreaking “computer use” feature, enabling the model to interact with computer environments autonomously. It can perform tasks such as moving the cursor, clicking buttons, and typing text, effectively mimicking human interactions for automation of complex workflows. This feature is particularly impactful for administrative tasks, research assistance, and creative projects.
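
The interaction pattern described above can be pictured as a loop in which the model proposes an action and a harness applies it. The sketch below is a toy simulation under stated assumptions: the `Action` and `FakeScreen` types and the action names are hypothetical illustrations, not Anthropic's API, and nothing here controls a real computer.

```python
from dataclasses import dataclass, field


@dataclass
class Action:
    """A hypothetical action a model might propose (not a real API type)."""
    kind: str  # "move", "click", or "type"
    x: int = 0
    y: int = 0
    text: str = ""


@dataclass
class FakeScreen:
    """A toy stand-in for a desktop: a cursor, one button, one text field."""
    cursor: tuple[int, int] = (0, 0)
    button_at: tuple[int, int] = (100, 50)
    clicked: bool = False
    typed: list[str] = field(default_factory=list)

    def apply(self, action: Action) -> None:
        if action.kind == "move":
            self.cursor = (action.x, action.y)
        elif action.kind == "click":
            # A click only registers if the cursor is on the button.
            if self.cursor == self.button_at:
                self.clicked = True
        elif action.kind == "type":
            self.typed.append(action.text)
        else:
            raise ValueError(f"unknown action: {action.kind}")


def run(actions: list[Action], screen: FakeScreen) -> FakeScreen:
    """Apply each proposed action in order and return the final screen."""
    for action in actions:
        screen.apply(action)
    return screen


if __name__ == "__main__":
    plan = [
        Action("move", x=100, y=50),
        Action("click"),
        Action("type", text="quarterly report"),
    ]
    final = run(plan, FakeScreen())
    print(final.clicked, final.typed)  # True ['quarterly report']
```

In a real deployment the actions would typically arrive one at a time, with the current screen state fed back to the model after each step. That feedback loop is omitted here for brevity.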

Enhanced Applications

Claude 3.5’s extended context, multimodal functionality, and autonomous capabilities open new doors for diverse industries:

- Education: Developing detailed curriculums with integrated visual aids and interactive learning modules.

- Finance: Generating advanced financial models that integrate textual, numerical, and visual data for comprehensive reporting.

- Healthcare: Supporting diagnostic tools by interpreting medical texts and images, aiding in early detection and treatment planning.

- Enterprise Automation: Automating repetitive administrative tasks like data entry, document formatting, and workflow management through “computer use.”

- Research and Development: Synthesizing large datasets and visual elements for cutting-edge innovations across disciplines.

Enterprise-Grade Reliability

Claude 3.5 remains the top choice for enterprises prioritizing precision, safety, and reliability. Its expanded capabilities and focus on ethical deployment ensure it meets the stringent demands of industries like healthcare, finance, and corporate strategy, making it a versatile and trustworthy partner in complex decision-making.

Qwen: From Qwen 1.0 to Qwen2.5

Qwen 1.0 (2023):

- Foundation of Multimodal AI: Qwen 1.0 marked Alibaba’s debut in the AI space, focusing on text-based conversational capabilities while laying the groundwork for future multimodal developments.

- Practical Applications: Primarily used in Alibaba’s ecosystem, Qwen 1.0 supported e-commerce platforms with chatbot integration for customer support, inventory queries, and personalized shopping experiences.

- Adoption Across Industries: Its ability to handle multilingual interactions made it appealing for global businesses requiring AI-driven customer communication.

Qwen 2.0 (2024):

- Introduction of Multimodal Capabilities: Qwen 2.0 brought significant advancements, integrating text and visual reasoning for applications requiring deeper context understanding, such as document analysis and product recommendations.

- Enhanced Multilingual Support: With robust language processing, Qwen 2.0 supported more languages and dialects, improving its adoption in diverse global markets.

- Scalability for Developers: Alibaba began offering Qwen 2.0 as an open-source model, allowing developers to customize and deploy it for specific use cases in retail, logistics, and education.

- Integration into Alibaba Cloud: Qwen 2.0 was embedded into Alibaba’s cloud services, enabling businesses to leverage the model’s AI capabilities for data processing, automation, and user experience enhancements.

Qwen2.5 (September 2024):

- Expanded Model Sizes: Qwen2.5 introduced models ranging from 0.5 billion to 72 billion parameters, catering to a broad spectrum of computational needs, from lightweight applications to large-scale enterprise projects.

- Advanced Multimodal Reasoning: Equipped with enhanced capabilities for text and visual data integration, Qwen2.5 excelled at tasks requiring multimodal reasoning, such as creating complex data visualizations, processing technical documents, and combining visual and textual analysis.

- Unprecedented Training Dataset: Qwen2.5 was trained on up to 18 trillion tokens, ensuring superior understanding and generation across multiple domains and languages.

- Open-Source Accessibility: Alibaba released over 100 open-source models in the Qwen2.5 family, fostering innovation and customization for developers worldwide.

- Introduction of QVQ-72B: A specialized variant, QVQ-72B, emphasized visual-textual reasoning, making it ideal for tasks such as AR/VR applications, e-commerce product previews, and interactive educational tools.

- Real-World Use Cases:

  - Retail and E-commerce: Powering personalized shopping experiences through real-time visual and textual recommendations.

  - Education: Assisting in multilingual content creation and interactive learning experiences.

  - Healthcare and Research: Supporting data visualization and multilingual document analysis for global collaboration.
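
A rough way to see why the range of Qwen2.5 model sizes matters: the memory needed just to hold a model's weights is approximately the parameter count times the bytes per parameter. The sketch below applies that arithmetic to the smallest and largest sizes mentioned above. The precision options and the function name are illustrative assumptions, and the estimate ignores activations, caches, and runtime overhead.

```python
# Bytes used per parameter at common numeric precisions (assumed options).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * BYTES_PER_PARAM[precision]
    return total_bytes / 1e9


if __name__ == "__main__":
    for size in (0.5, 72):
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(size, precision)
            print(f"{size}B @ {precision}: ~{gb:g} GB")
```

By this estimate, the 0.5B model's weights take about 1 GB at 16-bit precision, while the 72B model needs about 144 GB at 16-bit or about 36 GB at 4-bit, which is why the smaller variants suit lightweight deployments.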

Feature Showdown, Best of Four…

| Feature | ChatGPT-4 Turbo | Gemini 2.0 | Claude 3.5 | Qwen2.5 |
|---|---|---|---|---|
| Model Strength | Versatile, optimized for creativity and logic | Multimodal integration and autonomous task handling | Context-rich, ethical, and capable of autonomous computer use | Multimodal reasoning and extensive parameter scalability |
| Context Window | Up to 128k tokens | Up to 1M tokens (Gemini 2.0 Flash) | Up to 200k tokens | Up to 128k tokens on the larger models |
| Multimodal Capabilities | Text, images (vision processing enabled) | Text, images, audio, and video | Text, images, and enhanced multimodal processing | Visual and textual reasoning with multimodal support |
| Training Data | Extensive, up to late 2023 | Integrates Google’s datasets, including Workspace | Specialized focus on safety, ethics, and diverse data | Up to 18 trillion tokens across multiple languages and domains |
| Speed | Fast | Extremely fast, optimized for real-time tasks | Moderate, prioritizes accuracy and safety | Optimized for diverse computational resources |
| Pricing | Free tier + Plus at $20/month | Included in Google’s ecosystem | Premium pricing, reflecting advanced capabilities | Open-source models, accessible and customizable |
| User Experience | Intuitive, user-friendly | Seamless for Google users | Reliable, geared toward ethical applications | Flexible, customizable for specific use cases |
| Core Focus Areas | General-purpose, creative writing, automation | Multimodal AI for business and content creation | Ethical AI for research, strategy, and administrative automation | Multimodal reasoning, coding, and multilingual tasks |
| Autonomy Features | Requires user input for most processes | Agentic AI, minimal human input required | Introduced “computer use” for automating tasks on desktop environments | Open-source flexibility with autonomy features |
| Real-World Use Cases | Content creation, chatbots, document analysis | Multimedia presentations, workflow automation | Strategic planning, automating administrative tasks, ethical decision support | Visual-textual reasoning, multilingual applications |
| Security and Safety | Data privacy safeguards and content filtering | Strong safety protocols integrated with Google systems | Advanced ethical safeguards, sandbox testing for new features | Open-source but with customizable safeguards |
| Ideal for | Creators, businesses, educators, casual users | Businesses leveraging Google services, multimedia creators | Researchers, enterprises, and industries requiring autonomous and ethical AI | Developers, researchers, and industries needing scalable AI |
| Notable Additions Over Previous Versions | Enhanced context window, faster processing, lower cost | Advanced multimodal capabilities, agentic features | Autonomous “computer use,” expanded multimodal functionality | Open-source QVQ-72B model, extensive language support |
| API Availability | Yes, widely available | Yes, integrated with Google’s APIs | Yes, enterprise-focused | Yes, open-source APIs available |
| Multilingual Support | Extensive, supports multiple languages | Strong language capabilities across global datasets | Strong multilingual understanding | Advanced multilingual processing across domains |

What Does the Internet Say?

- “gemini-2.0-flash-exp: The BEST vision model for daily-use, based on my personal testing”

- “Big difference I have seen between Gemini Advanced and Chat GPT 4o”

- “I am a ChatGPT man, but man oh Man I am impressed with the latest Gemini model”

Conclusion

The advancements in conversational AI with ChatGPT-4 Turbo, Gemini 2.0, Claude 3.5, and Qwen2.5 showcase how rapidly the industry is evolving. Each of these models brings unique strengths to the table, making them ideal for different use cases:

- ChatGPT-4 Turbo excels in creativity, affordability, and versatility, making it the go-to choice for small businesses, creators, and anyone looking for a cost-effective yet powerful AI solution.

- Gemini 2.0 pushes the boundaries of multimodal capabilities and agentic AI, delivering speed and autonomy. It’s perfect for users deeply integrated into Google’s ecosystem or those requiring advanced reasoning and multimedia outputs.

- Claude 3.5 stands out for its ethical safeguards, unmatched contextual depth, and innovative “computer use” capabilities, positioning it as the top choice for enterprises in sensitive industries like healthcare, finance, and education.

- Qwen2.5 brings unparalleled flexibility through its open-source models and advanced multimodal reasoning. With scalable options and support for text, visual, and multilingual applications, it’s ideal for developers, researchers, and businesses seeking customizable AI solutions.

As AI continues to mature, choosing the right model depends on your specific needs. The agentic AI era is just beginning, and these tools are paving the way for a future where AI becomes an indispensable part of our lives.
